A couple of weeks ago I presented the topic of linked lists to my colleagues. The reason for that is that some of our colleagues don’t have a computer science background and they never learned it, even though they are software developers, but also because it was a bit forgotten to some of the others.

The way that I introduced the topic was with the problem of finding anagrams of a word. The problem is very simple, we have a dictionary of English words in a file and we want to find all the anagrams of a given word that are on that file.

If we would want to do this in standard C programming we would have a problem. How many words are in the file? How big should the array be? Would an array of 1000 strings be enough? Maybe 100000? Of course we could use a command line utility like wc to count the words in the file but that would defeat the purpose of me trying to teach the problem.

The problem here is that our program might not be able to even load the whole file or it might waste too much memory if we create an array that is too big for our purposes.

The concept of Node

In 1955 a solution to this problem was developed by Allen Newell, Cliff Shaw and Herbert A. Simon.

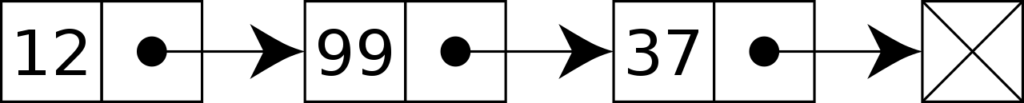

It consists of having the concept of a Node where you have the data that you want to store and a reference to another node.

It would be presented like this:

Since my team is working on mobile software development in both Android and iOS and I’m working on Android, I used the Kotlin programming language to drive the examples.

This could be represented in Kotlin like this:

class Node<T>(val data: T, val next: Node?)Having that class, we just need to implement a function that can add a node to another node. Could be something like this:

fun <T> add(data: T, node: Node?): Node =

if (node == null) Node(data, null)

else Node(node.data, add(data, node.next))Having that we can easily load all words from the file and we can also create another function to count how many words are there:

fun <T> size(node: Node?): Int =

if (node == null) 0 else 1 + size(node.next)Very simple and straightforward recursive code.

StackOverflowError

When we try to run this we get a StackOverflowError, why?

Exception in thread "main" java.lang.StackOverflowError

at zanagram.AppKt.add(App.kt:13)

at zanagram.AppKt.add(App.kt:13)

at zanagram.AppKt.add(App.kt:13)

at zanagram.AppKt.add(App.kt:13)

at zanagram.AppKt.add(App.kt:13)

at zanagram.AppKt.add(App.kt:13)The problem is that we’re probably dealing with a very large number of words so the recursive calls overflow the stack.

Tail recursion

One solution to this stack overflow problem would be to be able to eliminate the recursion but still write in a recursive style. This is possible by using tail recursion. Fortunately Kotlin supports tail recursion by using the tailrec modifier so we could write our size function like this:

fun <T> size(node: Node?): Int = size(node, 0)

tailrec fun <T> size(node: Node?, acc: Int): Int =

if (node == null) acc else size(node.next, 1 + acc)If you’re not familiar with tail call optimization you can read about it on Wikipedia.

The add function is a bit more complicated and will need to create another function to reverse the list.

fun <T> add(data: T, node: Node<T>?): Node<T>? =

add(data, node, null)

tailrec fun <T> add(

data: T, node: Node<T>?, acc: Node<T>?

): Node<T>? =

if (node == null) reverse(Node(data, acc))

else add(data, node.next, Node(node.data, acc))

fun <T> reverse(node: Node<T>?): Node<T>? =

reverse(node, null)

tailrec fun <T> reverse(

node: Node<T>?, acc: Node<T>?

): Node<T>? =

if (node == null) acc

else reverse(node.next, Node(node.data, acc))Having these functions we can finally run our program.

On my machine, after 18 minutes I get the result.

Performance Analysis

Why would such a simple problem take so long to execute?

A naïve answer that I get frequently is that it is because of recursion. It’s a naïve answer because of the failure to realize that it’s only written in a recursive style. Since it’s tail-call optimized, in reality it’s not really recursive after the compilation process.

To prove that it’s definitely not because of recursion, let’s rewrite our code to not be recursive at all at see if there is any difference.

For this we need to change our Node class to not be immutable anymore by using var instead of val.

class Node<T>(val data: T, var next: Node?)Having this, our add and size functions would look like this:

fun add(data: T, node: Node?): Node {

if (node == null) return Node(data, null)

var n = node

while (n.next != null) n = n.next

n.next = Node(data, null)

return node

}

fun size(node: Node?): Int {

if (node == null) return 0

var size = 0

var n = node

while (n != null) {

size++

n = n.next

}

return size

}It’s very obvious from this new implementation that we lost a lot of the expressiveness, beauty and simplicity of a recursive function call but let’s see now the impact in the overall performance.

This time the program runs in 16 minutes.

Where did this 2 minute performance improvement came from?

Performance impact of immutability

If you look carefully, the performance impact didn’t come from the removal of recursion but from the fact that we’re not using an immutable data structure anymore. In our previous recursive implementation we’re always creating a completely new list every time we want to add a new element. This is obviously sub-optimal and we should definitely use the non immutable version.

The takeaway here is that for this specific problem, the overhead of always creating a completely new list makes no sense at all. Two minutes of computation is a lot of time!

Big-O Notation and Complexity Analysis

These 16 minutes are obviously still not acceptable, it’s just way too slow. We need to attack the problem from a different perspective.

What is the time complexity of adding to the end of a linked list? Since we’re adding to the end, we always have to move through all the previous elements to reach the end and add a new node there. This means that the time complexity of our add function is O(n).

If we have an O(n) operation that we have to repeat n times, we’re dealing with an operation that will be O(n^2). Every time that you have a big problem, an O(n^2) approach will just be too slow.

From O(n^2) to O(n)

What if we add at the beginning of the list instead of at the end?

You can immediately notice that this would end up giving us the words in reverse order, which we actually don’t care since we have to search all of them anyway but even if we would care, we could reverse the list afterwards.

Adding at the beginning is a constant time O(1) operation. Since we have to repeat this operation for all words we end up with an O(n) operation.

Reversing the list is also an O(n) operation, which would give us an O(n) + O(n) = O(2n) = O(n) operation.

This shows mathematically that even with the extra cost of reversing the whole list, it would still be faster than always adding to the end of the list.

We can easily verify the mathematical explanation by running the code and see that it now finishes in 157 milliseconds.

We have 466551 words in our file.

Finding a word with a linear search

Now that we can load the dictionary in a totally acceptable amount of time, let’s see how we can find a word in the list.

tailrec fun <T> contains(data: T, node: Node?): Boolean =

when {

node == null -> false

node.data == data -> true

else -> contains(data, node.next)

}Here we’re going through all the words in the list until we find our word. If we reach the end, our word is not there.

Improving the performance of finding a word

How could we improve the performance of finding a word?

One possibility would be to sort all the words. If the words are sorted we don’t need to search through all of them just like we would do on a paper dictionary, we could check the word in the middle and decide if we should continue searching left or right. The problem is that a linked list data structure doesn’t allow us access to the middle element.

Dynamic Array instead of Linked List

Since our linked list doesn’t serve our purpose we will study another data structure called a dynamic array. But that’s the topic of another article.

Thanks for reading! If you want to read the next article about the dynamic array, please click here.

Thumbs up, cool article